Uninterrupted by Design: 100% Uptime Through Every Season

February 26, 2026

When winter storms sweep across New Jersey, they often leave behind closed highways, delayed transportation and neighborhoods bracing for potential outages. Snow accumulates along the coast, winds intensify, and communities prepare for disruption.

Inside NJFX’s purpose-built cable landing station and colocation campus, operations continue without pause.

For ten consecutive years, NJFX has maintained 100 percent uptime. Through blizzards, coastal storms, heat waves and other extreme weather events, the facility has operated continuously. The record reflects careful engineering and disciplined execution rather than favorable conditions.

Reliability at NJFX is intentional. It is designed into every system and reinforced by a team trained to perform under pressure.

Infrastructure Designed for Continuity

At the foundation of NJFX’s operations is a layered redundancy model. The facility operates with an N+1 configuration across critical infrastructure systems: each system has at least one more unit than the load requires, so reserve capacity is available if a primary component fails or is taken out of service.
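As a rough illustration of what N+1 means in practice, the sketch below checks whether a set of identical units can still carry a load with any one unit out of service. The function names and the kilowatt figures are hypothetical and are not taken from NJFX's actual design.

```python
import math


def units_required(load_kw: float, unit_capacity_kw: float) -> int:
    """Return N: the minimum number of units needed to carry the load."""
    return math.ceil(load_kw / unit_capacity_kw)


def is_n_plus_one(load_kw: float, unit_capacity_kw: float, units_installed: int) -> bool:
    """Return True if the load is still covered with any one unit offline."""
    return units_installed - 1 >= units_required(load_kw, unit_capacity_kw)


# Hypothetical example: a 900 kW load served by 500 kW units needs N = 2,
# so three installed units satisfy N+1 and two do not.
print(is_n_plus_one(900, 500, 3))
print(is_n_plus_one(900, 500, 2))
```

The same check applies to any system built from interchangeable units, such as generators or cooling units, as long as capacity and load are expressed in the same terms.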

Dual utility feeds provide diversified power sources to the campus. On-site generation systems are positioned to engage immediately if external supply is compromised. Power is conditioned and distributed through engineered pathways that eliminate single points of failure.

Environmental systems operate with equal precision. Cooling infrastructure is calibrated to maintain stable performance during fluctuating seasonal conditions. Sensors continuously monitor temperature, humidity and load levels throughout the facility. Real-time data allows operators to detect and address anomalies before they escalate.
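The kind of threshold monitoring described above can be sketched in a few lines. This is a minimal illustration only: the metric names and limits are assumptions chosen for the example, not NJFX's actual operating ranges or monitoring platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    low: float
    high: float


# Hypothetical acceptable ranges, for illustration only.
THRESHOLDS = {
    "temperature_c": Threshold(18.0, 27.0),
    "humidity_pct": Threshold(20.0, 80.0),
    "load_pct": Threshold(0.0, 80.0),
}


def find_anomalies(reading: dict[str, float]) -> list[str]:
    """Return the metrics in a reading that fall outside their range."""
    anomalies = []
    for metric, value in reading.items():
        limit = THRESHOLDS.get(metric)
        if limit is not None and not (limit.low <= value <= limit.high):
            anomalies.append(metric)
    return anomalies


# A reading with an elevated temperature is flagged; the others pass.
print(find_anomalies({"temperature_c": 29.5, "humidity_pct": 45.0, "load_pct": 60.0}))
```

A production system would typically also watch trends over time, so operators can act on a drifting value before it crosses a limit.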

Preventative maintenance follows a disciplined schedule. Equipment is tested under load. Systems are inspected routinely. Contingency protocols are reviewed regularly. Preparedness is not seasonal. It is constant.

Operations as a Coordinated System

While infrastructure provides the framework, operational excellence sustains performance.

NJFX maintains a fully staffed, 24-hour on-site operations team. Monitoring platforms run continuously, providing live visibility into power distribution, cooling metrics and system health. Alerts are structured to ensure immediate response if performance thresholds shift.

The team functions with defined roles and cross-trained expertise. Procedures are documented clearly. Communication protocols are established and practiced. Each member understands not only individual responsibilities but also how their work supports the broader system.

When severe weather is forecast, preparations begin early. Backup systems are verified. Fuel levels are confirmed. Staffing rotations are adjusted to guarantee on-site presence regardless of road conditions. Supplies are stocked in advance of anticipated storms.

During recent blizzards that moved through New Jersey, NJFX remained fully operational. While travel advisories were issued across the region, the campus stayed accessible. Driveways and walkways were cleared consistently. Access control systems remained active. Security operations continued without interruption.

Customers who could not travel safely relied on NJFX’s remote hands services. On-site technicians performed equipment inspections, verified connections and executed tasks on behalf of client teams. This approach minimized risk for customers while maintaining continuity of service.

The coordination between systems and personnel operates with precision. Each process supports the next. Monitoring informs action. Redundancy supports stability. The team executes with discipline.

A Decade of Performance Under Pressure

Ten years without downtime in critical infrastructure is a measurable achievement. In an environment where seconds of downtime can affect financial markets, cloud platforms and enterprise networks, consistency carries weight.
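To put the figure in context, the sketch below converts an availability percentage into the downtime it allows over a given period. The helper name is hypothetical, and the 99.999 percent comparison is a common industry benchmark used here for illustration, not a figure from NJFX.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # average year, including leap days


def downtime_budget_seconds(availability_percent: float, years: float = 1.0) -> float:
    """Return the downtime, in seconds, permitted by an availability level."""
    return (1 - availability_percent / 100) * SECONDS_PER_YEAR * years


# 99.999 percent availability still permits roughly five minutes of
# downtime per year; 100 percent over ten years permits none.
print(round(downtime_budget_seconds(99.999), 1))
print(downtime_budget_seconds(100.0, years=10))
```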

NJFX’s resilience has been tested repeatedly by seasonal extremes along the Atlantic coast. Heavy snowfall, ice accumulation and high winds have challenged regional utilities and transportation systems. Inside the facility, operations have remained stable.

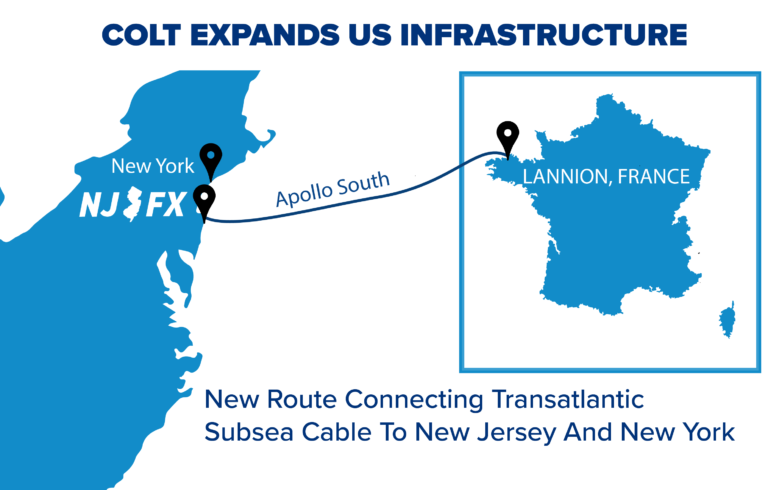

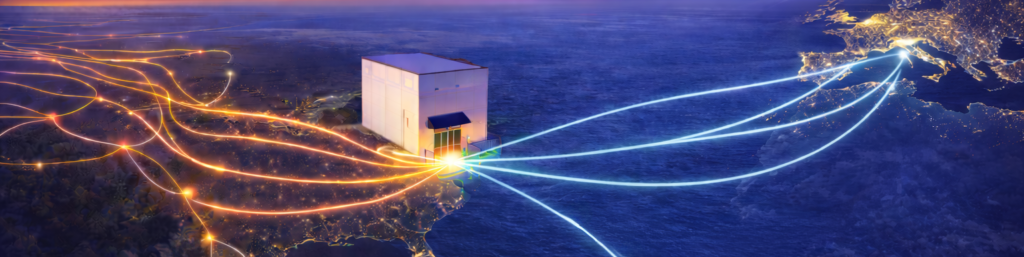

Connectivity moves through NJFX regardless of conditions outside. Subsea cables converge with terrestrial networks at the site, carrying traffic across continents and throughout the United States. Enterprises depend on that continuity.

The reliability is not situational. It is structural and operational.

Never Down

The NJFX campus may appear quiet from the outside, particularly during a snowstorm. Inside, systems continue running with precision. Power flows through redundant paths. Cooling systems maintain environmental stability. Monitoring screens remain active around the clock.

Infrastructure and team operate as one coordinated unit.

For ten years, through every season and under every condition, NJFX has delivered uninterrupted performance. The weather may shift. The demand for connectivity may increase. The standard remains the same.

NJFX operates without interruption.
