Why operators and enterprises will need an AI data center strategy

Ivo Ivanov (CEO at DE-CIX), Data Center Dynamics

February 1, 2024

As Mobile World Congress (MWC) 2024 draws near, the integration and impact of artificial intelligence (AI) in our digital economy cannot be overstated.

AI has always been a hot topic in the mobile industry, but this year it’s more than just an emerging trend; it’s a central pillar in the evolving landscape of telecommunications.

The democratization of generative AI tools such as ChatGPT and PaLM, and the sheer availability of high-performance Large Language Models (LLMs) and machine learning algorithms, means that digital players are now queuing up to explore their value and potential use cases.

The race to uncover and extract this value means that many market participants are now getting directly involved in using or building digital infrastructure.

The likes of Apple and Netflix walked this path almost a decade ago, and now banks, automotive companies, logistics enterprises, fintech operators, retailers, and healthcare specialists are all embarking on the same journey. The benefits are simply too good to pass up.

Crucially, we’re not just talking about enterprises owning a bit of code or developing new AI use cases; we’re talking about these companies having a genuine stake in the infrastructure they’re using. That means their attention is turning to things like data sovereignty, network performance, latency, security, and connection speed. They need to make sure that the AI use cases they’re pursuing are going to be well accommodated long into the future.

The need for network controllability

Enterprises are no longer mere spectators in the AI arena; they are active stakeholders in the infrastructure that powers their AI applications.

For instance, a retail company employing AI for personalized customer experiences must command not only the algorithms but also the underlying data handling and processing frameworks to ensure real-time, effective customer engagement.

This shift toward controllability underscores the importance of data security, compliance adaptability, and operational customization.

It’s about having the capability to quickly adjust to evolving market demands and regulatory environments, as well as optimizing systems for peak performance.

In essence, controllability is becoming a fundamental requirement for enterprises, signifying a shift from passive participation to proactive management in the network landscape.

Low latency is no longer optional

In the high-stakes world of AI, where milliseconds can determine outcomes, latency becomes a make-or-break element.

For example, in the financial sector, where AI is used for high-frequency trading, even a slight delay in data processing can result in significant performance losses. Similarly, for healthcare providers using AI for real-time patient monitoring, latency directly impacts the quality of care and patient outcomes.

Enterprises are therefore prioritizing low-latency networks to ensure that their AI applications function at optimal efficiency and accuracy. This focus on reducing latency is about more than speed; it’s about creating a seamless, responsive experience for end-users and maintaining a competitive edge in an increasingly AI-driven market.

As AI technologies continue to advance, the ability of enterprises to manage and minimize latency will become a key factor in harnessing the full potential of these innovations.

Localization will become mission-critical

Previously discussed only in the context of content delivery networks (CDNs) and cloud models, localization now plays a crucial role in AI performance and compliance. A striking example of this is Dubai’s journey in localizing Internet routes.

From almost no local Internet routes a decade ago to achieving 90 percent today, Dubai has dramatically reduced latency from 200 milliseconds to a mere three milliseconds for accessing global content.
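To put those figures in perspective, here is a rough back-of-the-envelope sketch of what the drop means for an application that makes several sequential network round trips per interaction. The 200 ms and 3 ms values come from the Dubai example above; treating them as per-round-trip latency, and the figure of 10 round trips, are assumptions made purely for illustration.

```python
# Latency figures quoted above for Dubai, in milliseconds.
# Assumption: we treat each figure as the cost of one network round trip.
LATENCY_BEFORE_MS = 200.0
LATENCY_AFTER_MS = 3.0


def total_wait_ms(latency_ms: float, round_trips: int) -> float:
    """Network wait for a request that needs `round_trips` sequential round trips."""
    if round_trips < 0:
        raise ValueError("round_trips must be non-negative")
    return latency_ms * round_trips


# Hypothetical workload: 10 sequential round trips per user interaction.
ROUND_TRIPS = 10

before = total_wait_ms(LATENCY_BEFORE_MS, ROUND_TRIPS)
after = total_wait_ms(LATENCY_AFTER_MS, ROUND_TRIPS)
reduction = 1 - LATENCY_AFTER_MS / LATENCY_BEFORE_MS

print(f"before: {before:.0f} ms, after: {after:.0f} ms")
print(f"per-round-trip reduction: {reduction:.1%}")
```

Under these assumptions, the same interaction goes from roughly two seconds of network wait to a few tens of milliseconds, which is the difference between a noticeable pause and a response that feels instantaneous.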

This shift highlights the performance benefits of localization, but there are legal imperatives too. With regions like Europe and India enforcing strict data sovereignty laws, managing data correctly within specific jurisdictions has become more important as data volumes have increased.

The deployment of AI models, and by proxy the networks accommodating them, must therefore align with local market needs, demanding a sophisticated level of localization that businesses are now paying attention to.

Multi-cloud interoperability

AI is also reshaping how enterprises approach cloud computing, especially in the context of multi-cloud environments. AI’s intensive training and processing often occur within a specific cloud infrastructure.

Yet the ecosystem is more intricate, as the numerous applications that either feed data to, or use data from, these AI models are likely distributed across different cloud platforms.

This scenario underscores the critical need for seamless interoperability and low-latency communication between these cloud environments.

A robust multi-cloud strategy, therefore, isn’t just about leveraging diverse cloud services; it’s about ensuring these services work in harmony as they facilitate AI operations.

All of these factors (controllability, latency, localization, and cloud interoperability) will become increasingly important to enterprises as use cases develop. Take self-driving cars, for instance. Latency and the real-time exchange of data are obviously critical here, but so are cloud interoperability and data sovereignty.

A business cannot serve an AI-powered driver assistance system from one region if the car is in another. These systems also learn and adapt to individual driving patterns, and handle sensitive personal information, making compliance with regulations like the General Data Protection Regulation (GDPR) in the EU not just a legal obligation but a trust-building imperative.

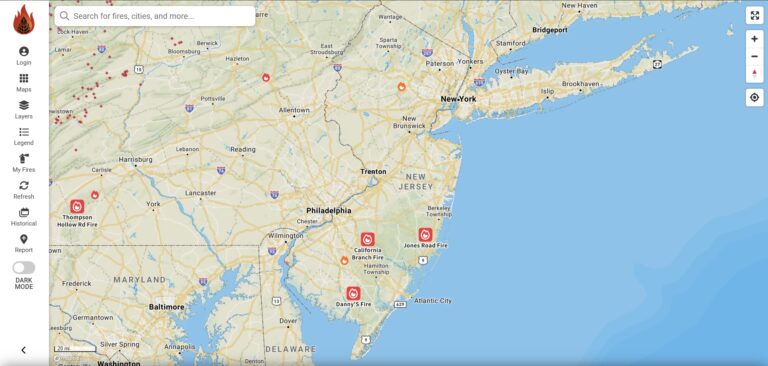

Networking and interconnections

If data center operators want to win business from these AI-hungry, data-driven enterprises, they need to move their focus beyond mere servers, power, and cooling space.

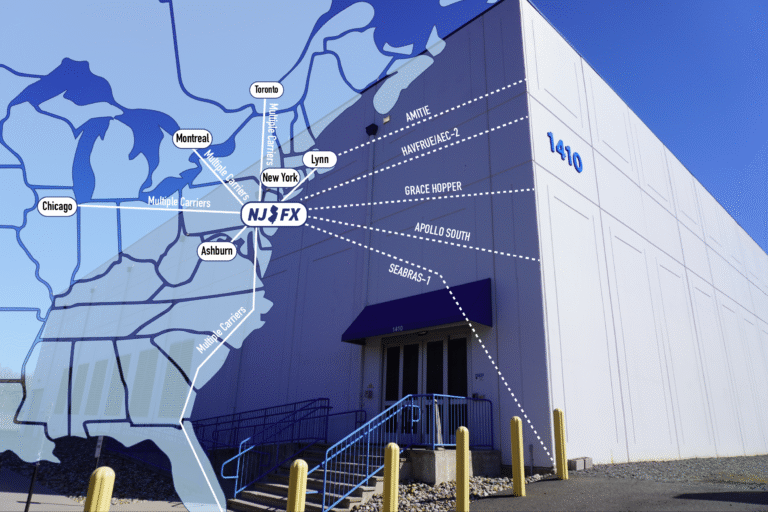

Forward-looking data centers are now evolving to support their enterprise customers more effectively by providing direct connectivity to cloud services.

This is ideally achieved through housing or providing direct access to interconnection platforms in the form of an Internet Exchange (IX) and/or Cloud Exchange.

This will allow different networks to interconnect and exchange traffic directly and efficiently, bypassing the public Internet, which reduces latency, improves bandwidth, and enhances overall network performance and security.

Enterprises are more invested than ever in the connectivity infrastructure powering their services, and to win customers, data centers are going to need to take a more collaborative and customizable approach to data handling and delivery.

This isn’t just a response to immediate challenges; it’s a proactive blueprint for a future where AI’s potential is fully realized.
