All-Hazard Preparation, Safety, and Recovery Training (Cyberattack)

How All-Hazard Safety and Recovery Training Strengthens Our Defense Against Cyberattacks

Learn how all-hazard training modules focused on preparation, safety, and recovery are equipping teams with the skills and awareness needed to prevent disruptions, protect public safety, and respond rapidly during digital crises.

Strengthening Our Digital Frontlines: The Importance of Cyber Preparedness, Safety, and Recovery

As Cybersecurity Awareness Month continues, NJFX is underscoring the critical importance of preparedness, safety, and recovery practices when facing cyber threats. Our team has been diligently completing individual cybersecurity training modules, and we are proud to share that we are nearing 100% compliance. This proactive approach reflects our commitment to protecting the vital infrastructure and communities we serve.

Why Cybersecurity Matters More Than Ever

Cyberattacks have evolved in scale, frequency, and sophistication. Organizations supporting digital communication infrastructure—like data centers, subsea cable landing stations, and network interconnection points—are increasingly being targeted. These assets are foundational to everything from global financial transactions to emergency response communications.

In New Jersey, the Communications Sector plays a vital role in national connectivity, linking domestic networks to international subsea routes that carry the world’s data. A disruption in this ecosystem has the potential to impact millions of people and organizations across the region and beyond.

The Impact of Cyberattacks on Critical Infrastructure

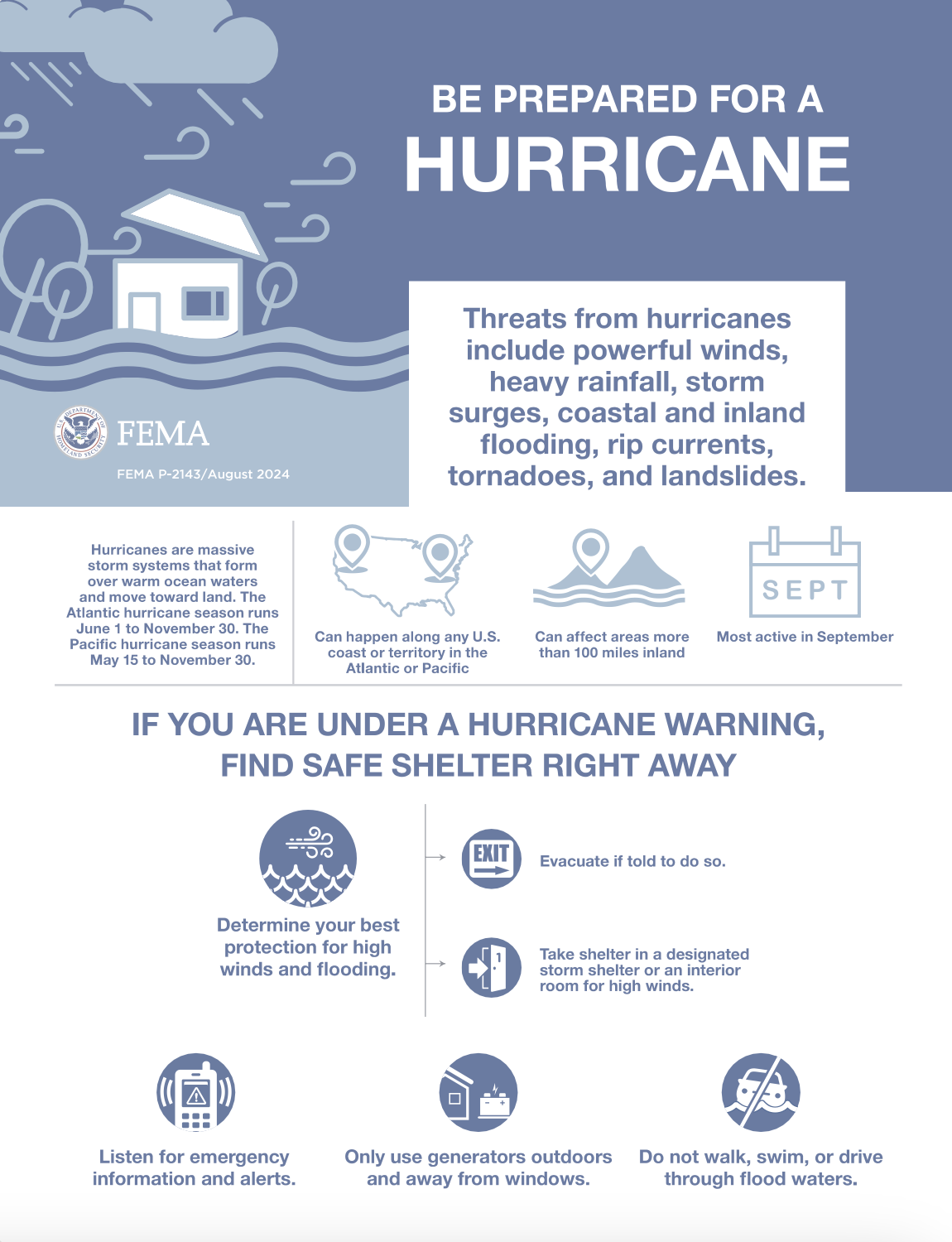

When malicious actors launch cyberattacks—whether ransomware, distributed denial of service (DDoS), phishing campaigns, or system intrusions—the consequences can be significant:

Service Disruptions & Outages: Interrupting connectivity can halt operations, restrict communication, and impact essential services like hospitals and emergency management.

Financial & Operational Losses: A breach can lead to costly recovery efforts, system repairs, and reputational damage.

Data Integrity & Security Risks: Unauthorized access can compromise sensitive data, customer information, and mission-critical systems.

Cascading Regional or National Impacts: Because networks are interconnected, a cyber incident at one facility can spread, affecting broader infrastructure ecosystems.

This cascading risk is especially relevant for facilities like cable landing stations and carrier-neutral interconnection hubs, where domestic and global networks converge. An attack at one point of interconnection can trigger far-reaching disruptions.

Preparation, Safety, and Recovery: A Resilient Approach

At NJFX, cybersecurity is not just a compliance requirement—it’s a culture of vigilance. Our approach is built on three key pillars:

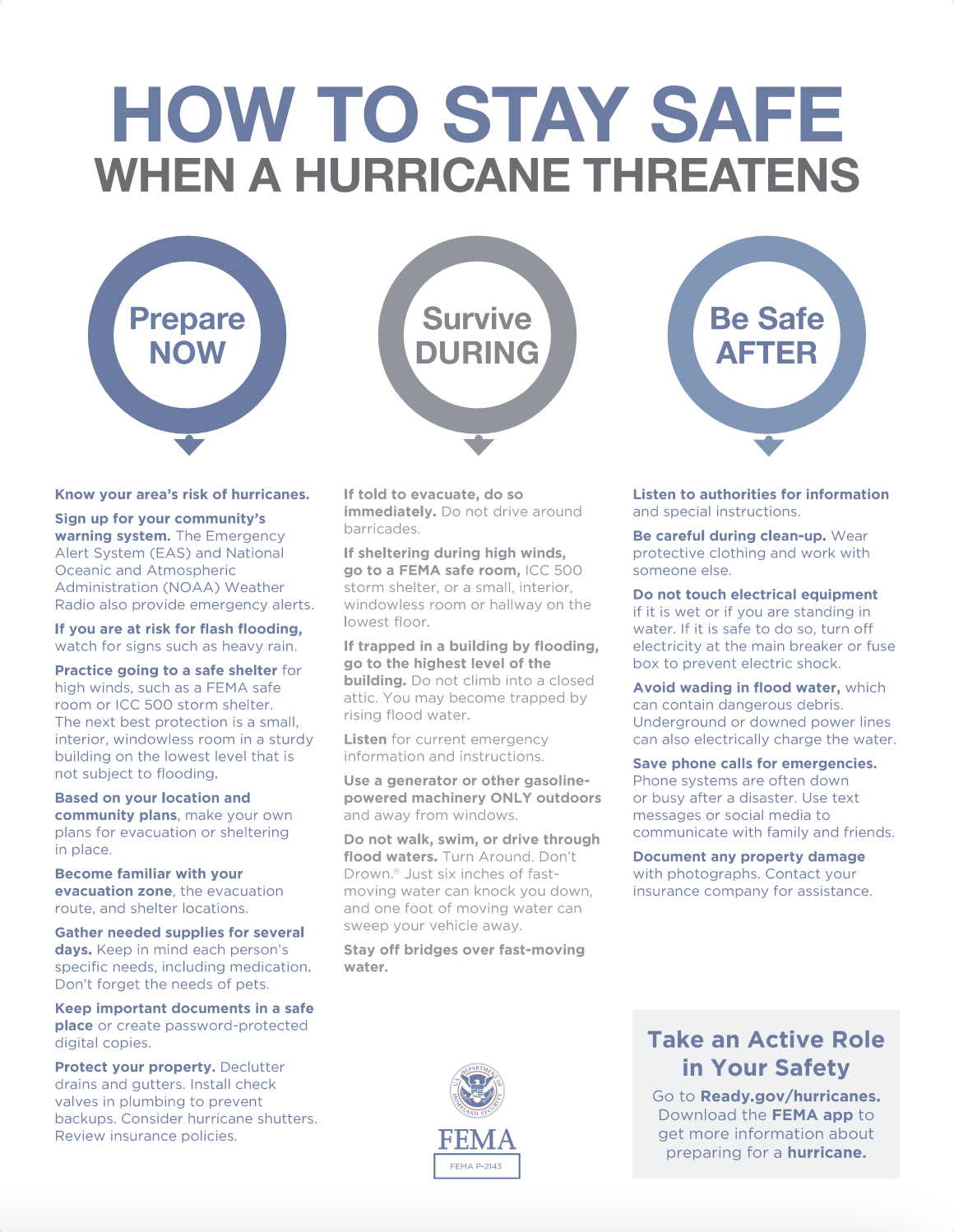

1. Preparation

Ongoing employee training and awareness programs

Updated security protocols aligned with industry standards

Regular vulnerability assessments and proactive network monitoring

2. Safety

Strong access control measures and 24/7 security operations

Physical security integration with digital threat protection

Strict procedures for managing and maintaining customer equipment and systems

3. Recovery

Comprehensive incident response planning

Cross-team coordination to minimize downtime

Redundant pathways and diverse connectivity options to maintain resilience even during disruptive events

By reinforcing these measures, we help ensure that the communications infrastructure supporting regional, national, and global networks remains secure and reliable.
