All Hazard Preparation, Safety, Recovery Training (Gray Zone)

Gray Zone Threats

February 14, 2026

Gray Zone Threats (GZTs) are ambiguous, malicious activities that fall short of open warfare but undermine stability and integrity, often involving cyberattacks, disinformation, espionage, or covert operations. In New Jersey’s Communications Sector, these threats pose significant risks to critical infrastructure, including telecommunications networks, internet services, and emergency communication systems.

Impact on Critical Infrastructure:

Disruption of Communications: GZTs can compromise or disrupt communication channels, hindering emergency response and coordination during crises.

Cyberattacks and Espionage: Malicious actors may infiltrate network systems to steal sensitive information or disable critical services, creating vulnerabilities that can be exploited further.

Disinformation Campaigns: The spread of false information can undermine public trust and interfere with operational decision-making.

Undermining Confidence: Persistent gray zone activities erode confidence in the resilience of communication systems, potentially undermining social and economic stability.

Mitigation Strategies:

- Strengthening cybersecurity defenses.

- Enhancing cooperation between government and private sector.

- Implementing robust monitoring and response plans.

- Promoting resilience and redundancy in communication networks.
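The redundancy goal in the last item can be checked mechanically. The sketch below is a minimal illustration, not an operational tool: it lists every link whose loss alone would split a network. The site names and topology are hypothetical and do not describe any real New Jersey network.

```python
from collections import deque


def is_connected(nodes, links):
    """Return True if every node is reachable from the first node."""
    nodes = list(nodes)
    if not nodes:
        return True
    adjacency = {n: set() for n in nodes}
    for a, b in links:
        adjacency[a].add(b)
        adjacency[b].add(a)
    seen = {nodes[0]}
    queue = deque([nodes[0]])
    while queue:
        current = queue.popleft()
        for neighbor in adjacency[current]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return len(seen) == len(nodes)


def single_points_of_failure(nodes, links):
    """List the links whose removal alone disconnects the network."""
    critical = []
    for i, link in enumerate(links):
        remaining = links[:i] + links[i + 1:]
        if not is_connected(nodes, remaining):
            critical.append(link)
    return critical


# Hypothetical topology: names and links are illustrative assumptions.
SITES = ["central_office", "landing_station", "data_center", "ems_dispatch"]
LINKS = [
    ("central_office", "landing_station"),
    ("landing_station", "data_center"),
    ("data_center", "central_office"),
    ("central_office", "ems_dispatch"),  # the only path to dispatch
]

if __name__ == "__main__":
    print(single_points_of_failure(SITES, LINKS))
```

In this example the three core sites form a ring, so losing any one ring link leaves them connected, while the single link to the dispatch site is flagged. Adding a second, physically separate path to that site would clear the finding.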

Overall, Gray Zone Threats challenge the security and reliability of New Jersey’s Communications Sector, requiring vigilant, adaptive, and coordinated efforts to safeguard critical infrastructure.

The sections below describe Gray Zone Threats in more detail, with examples:

Gray Zone threats refer to strategies and activities that lie between traditional war and peaceful competition. They are characterized by ambiguity, deniability, and often involve non-military tools to achieve strategic objectives without triggering a full-scale conflict.

Overview of Gray Zone Threats

- Definition: Actions intended to erode stability or influence an opponent without crossing the threshold of open warfare. They often involve cyberattacks, disinformation, economic pressure, and covert operations.

- Characteristics:

  - Ambiguous attribution

  - Plausible deniability for actors involved

  - Use of asymmetric tactics

  - Slow, persistent, and adaptable operations

Application to Critical Infrastructure (CI)

- Threats to CI include cyberattacks on communications, water systems, energy grids, transportation, and healthcare infrastructure.

- Gray Zone tactics may involve:

  - Cyber intrusions causing disruptions or damage

  - Supply chain interference

  - Disinformation campaigns to undermine public trust

  - Covert influence operations targeting policy or operational decision-makers

Application to the Communications Sector

- The communications sector is critical for societal functioning, making it a prime target.

- Gray Zone tactics include:

  - Disrupting or degrading communication networks via cyber means

  - Spreading misinformation or disinformation through social media and other platforms

  - Exploiting vulnerabilities in telecommunications infrastructure

  - Using clandestine influence campaigns to sway public opinion or political outcomes

Implications

- These threats challenge traditional detection and attribution methods.

- Countering them requires robust cybersecurity, intelligence sharing, and resilience planning.

- Protecting national security and public safety depends on understanding and countering ambiguous threats.

Current Gray Zone threats facing the United States encompass a range of activities aimed at undermining national security, economic stability, and societal cohesion without provoking full-scale conflict. Key threats include:

- Cyberattacks and Cyberespionage

  - State-sponsored hacking campaigns targeting government agencies, critical infrastructure, and private sector entities.

  - Examples include intrusion attempts on energy grids, financial systems, and supply chains.

- Disinformation and Influence Campaigns

  - Efforts by Peer or Near-Peer Adversaries (Russia, China, Iran) to spread misinformation via social media platforms.

  - Aimed at sowing discord, influencing elections, and eroding public trust.

- Economic Coercion and Sanctions Evasion

  - Use of economic pressure, sanctions, and covert financial activities to influence U.S. policy.

  - Activities like cryptocurrency-based money laundering to evade detection.

- Covert Operations and Espionage

  - Attempts to clandestinely gather intelligence or influence policy through agents, private allies, or proxy groups.

- Maritime and Territorial Ambitions

  - Near-Peer (China) actions in the Indo-Pacific involve gray zone tactics such as land reclamation and harassment without direct military confrontation.

- Information Warfare

  - Manipulating online narratives, promoting propaganda, and leveraging social divisions to weaken societal cohesion.

- Biological and Environmental Manipulation

  - Potential manipulation of environmental factors or biological agents to create instability or influence regions indirectly.

These threats often blend military, intelligence, cyber, economic, and informational domains, making them complex and challenging to counter. The U.S. government continues to enhance its resilience and detection capabilities to address these evolving Gray Zone activities.

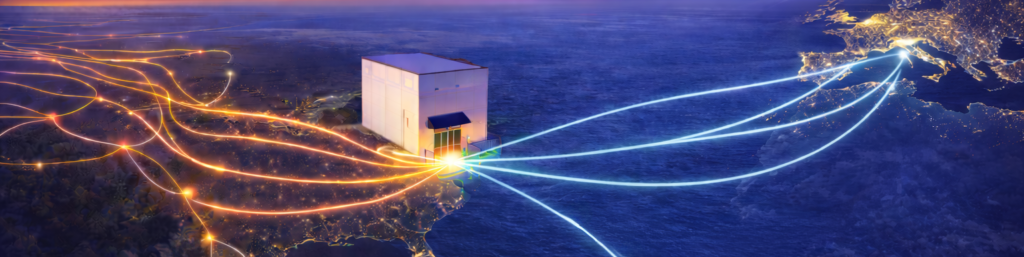

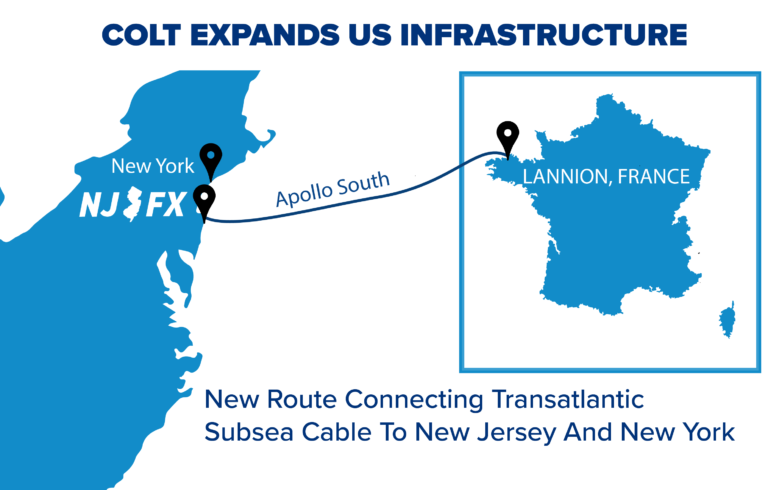

Gray Zone threats to Subsea Infrastructure and Cable Landing Stations (CLSs)

Gray Zone threats to subsea infrastructure and CLSs remain particularly concerning because these assets are critical to global communications, economic stability, and national security. These threats leverage ambiguity, deniability, and asymmetric tactics to undermine or disrupt these vital assets without triggering traditional military responses. A detailed overview follows:

- Cyberattacks on Subsea Infrastructure and CLSs

  - Targeted Cyber Intrusions: Adversaries may attempt to infiltrate network management systems of subsea cables and landing stations, causing disruptions, data interception, or sabotage.

  - Supply Chain Exploitation: Compromising hardware or software components during manufacturing or deployment to introduce vulnerabilities.

  - Advanced Persistent Threats (APTs): State-sponsored groups could establish long-term access to monitor traffic or prepare for future disruptions, blending espionage with potential sabotage.

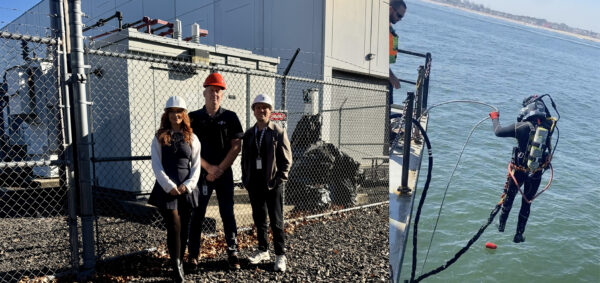

- Covert Physical Operations

  - Undersea Cable Tampering or Sabotage: Gray Zone actors might engage in clandestine cutting, tapping, or interference with subsea cables, often under cover of darkness or through disguised vessels, aiming to degrade communications without immediate attribution.

  - Vessel Incursions or Harassment: Disguised or non-military vessels may loiter near cable landing sites or anchor points, gathering intelligence or attempting physical interference.

- Disinformation and Influence Campaigns

  - Misleading Narratives: Propaganda to undermine confidence in the security or reliability of subsea communications, potentially creating panic or mistrust.

  - Operational Deception: Disinformation about the state of infrastructure or the presence of threats, complicating detection and response efforts.

- Exploitation of Vulnerabilities

  - Network Vulnerabilities: Use of cyber exploits targeting known weaknesses in SCADA (Supervisory Control and Data Acquisition) or other control systems managing cable operations.

  - Legitimacy Exploitation: Leveraging legal or diplomatic channels to create plausible deniability or delays in response, e.g., claiming routine maintenance or environmental concerns.

- Economic and Strategic Leverage

  - Restricting or Disabling Critical Links: Gray Zone actors might threaten or partially disable subsea cables to exert economic or political pressure, even while publicly denying involvement.

  - Influence in Policy and Decision-Making: Using cyber and influence tactics to sway regulatory or strategic decisions affecting subsea infrastructure.

Implications and Countermeasures:

- Enhanced Cybersecurity: Robust encryption, intrusion detection systems, continuous monitoring, and regular audits of infrastructure management systems.

- Physical Security & Surveillance: Deployment of underwater sensors, maritime patrols, and vessel monitoring around key cable landing sites.

- International Cooperation: Sharing intelligence, best practices, and coordinated responses among nations and private operators.

- Resilience Planning: Diversification of routes, redundant systems, and rapid repair capabilities to minimize impact from Gray Zone disruptions.

- Attribution and Response Readiness: Developing capabilities to attribute attacks accurately and respond proportionally within the Gray Zone framework to deter future activities.
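As one illustration of the vessel monitoring countermeasure above, the sketch below flags vessels that remain near a cable landing site for an extended period. It is a minimal example under stated assumptions: the input is taken to be AIS-style position reports reduced to (vessel ID, minute, latitude, longitude) tuples, and the site coordinates, 5 km radius, and 60-minute threshold are hypothetical values, not operational guidance.

```python
import math

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def loitering_vessels(reports, site, radius_km=5.0, min_minutes=60):
    """Return sorted IDs of vessels that stayed inside the zone around
    `site` for at least `min_minutes` without leaving.

    reports: iterable of (vessel_id, minute, lat, lon) tuples.
    site: (lat, lon) of the monitored location.
    """
    entered = {}  # vessel_id -> minute the current visit began
    flagged = set()
    for vessel_id, minute, lat, lon in sorted(reports, key=lambda r: (r[0], r[1])):
        inside = haversine_km(lat, lon, site[0], site[1]) <= radius_km
        if not inside:
            entered.pop(vessel_id, None)  # visit ended; reset the clock
            continue
        start = entered.setdefault(vessel_id, minute)
        if minute - start >= min_minutes:
            flagged.add(vessel_id)
    return sorted(flagged)


# Hypothetical data: coordinates and vessel IDs are illustrative only.
SITE = (40.0, -74.0)
REPORTS = [
    ("vessel_a", 0, 40.01, -74.01), ("vessel_a", 30, 40.01, -74.01),
    ("vessel_a", 60, 40.01, -74.01), ("vessel_a", 90, 40.01, -74.01),
    ("vessel_b", 0, 40.01, -74.00), ("vessel_b", 30, 40.50, -74.00),
    ("vessel_b", 60, 40.01, -74.00), ("vessel_b", 90, 40.01, -74.00),
    ("vessel_c", 0, 41.00, -73.00), ("vessel_c", 90, 41.00, -73.00),
]

if __name__ == "__main__":
    print(loitering_vessels(REPORTS, SITE))
```

Here only `vessel_a` is flagged: `vessel_b` leaves the zone between visits, which resets its clock, and `vessel_c` never comes within range. A flag of this kind is a prompt for human review, not evidence of hostile intent, since attribution in the Gray Zone is ambiguous by design.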

In summary, Gray Zone threats to Subsea Infrastructure and Cable Landing Stations are multifaceted, involving cyber, physical, informational, and strategic tactics. Addressing these requires a comprehensive, layered approach encompassing technological, diplomatic, and operational measures to safeguard these critical components of global communications.

Overall Analysis:

Many recent examples show how Gray Zone tactics are employed across multiple domains and how they interconnect: cyber operations complement disinformation efforts, and economic pressures support territorial ambitions. These tactics are designed to undermine U.S. influence, destabilize societal cohesion, and assert strategic advantages without provoking direct military conflict. The ongoing evolution of these threats requires comprehensive resilience, advanced detection, and international cooperation.
