At the recent DatacenterDynamics Connect conference in New York, industry experts convened for an insightful debate on a critical question: “Can New York’s network infrastructure still handle the data surge?” The discussion featured a distinguished panel moderated by Stephen Worn, CTO and Managing Director, NAM, DatacenterDynamics.

Panelists:

- Arthur Valhuerdi, Chief Technology Officer at Hudson Interxchange

- Jeff Uphues, CEO of DC BLOX

- Phillip Koblence, Co-Founder and Chief Operating Officer at New York Internet (NYI)

- Felix Seda, General Manager at NJFX

This esteemed panel addressed the current state and future readiness of New York’s digital infrastructure amid rapidly increasing data demands, particularly driven by advancements in artificial intelligence, edge computing, and hyperscale expansions.

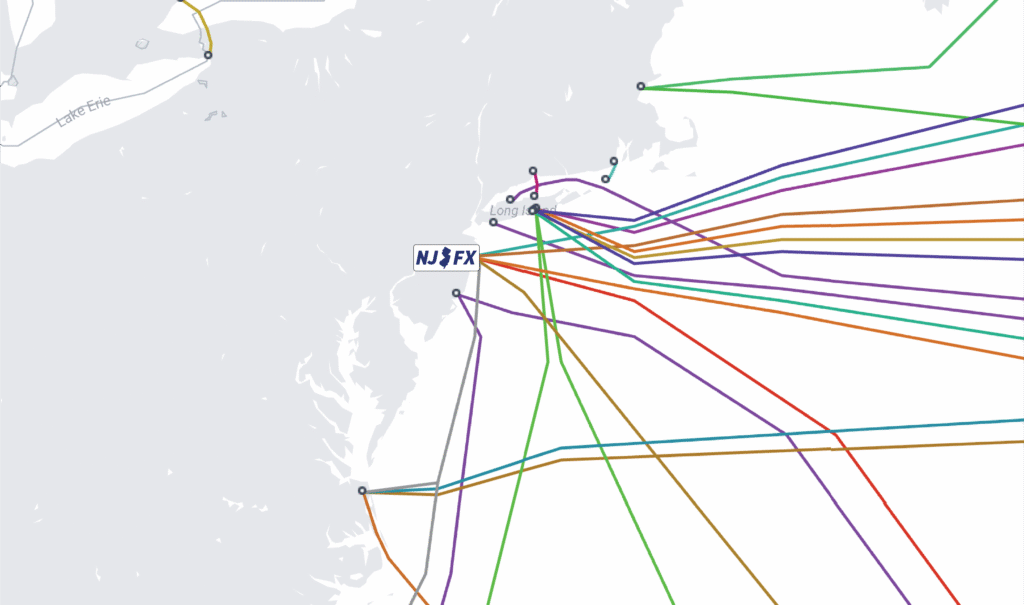

The Shifting Subsea Cable Landscape

Moderator Stephen Worn opened the discussion by addressing a common industry misconception, asking Felix Seda, “How many people still think that subsea cables land directly in Manhattan or New York City?”

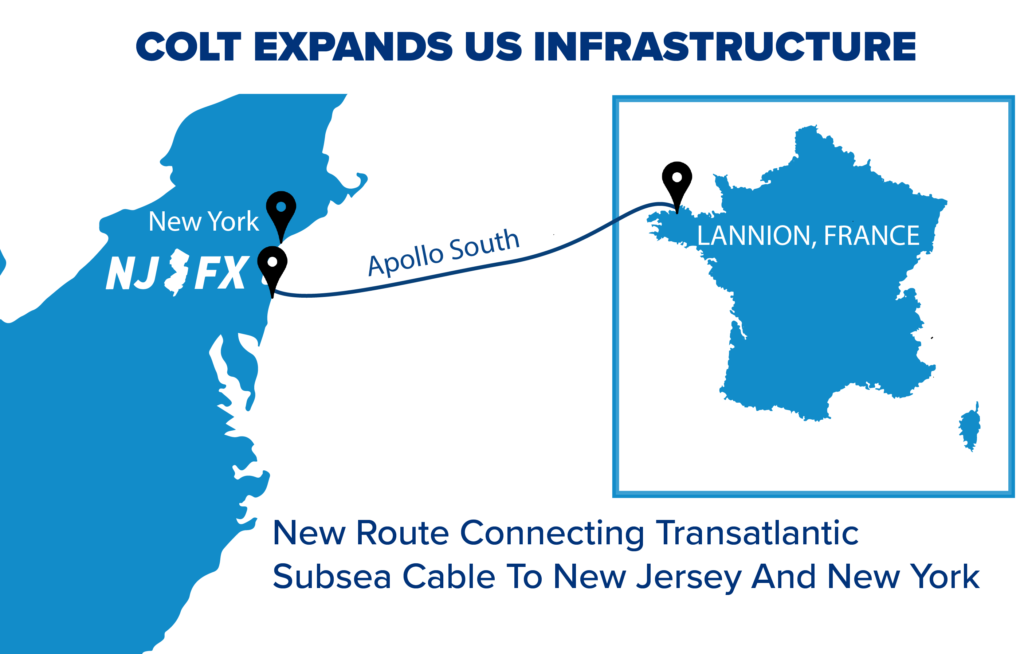

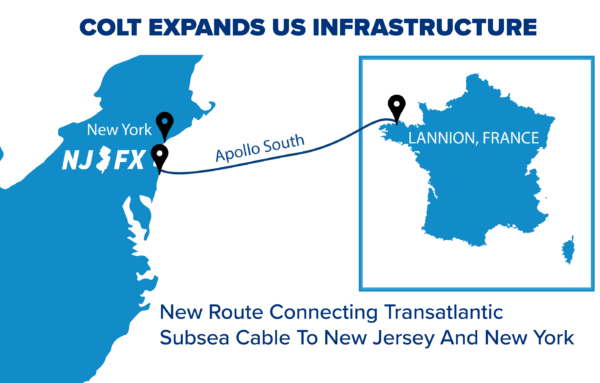

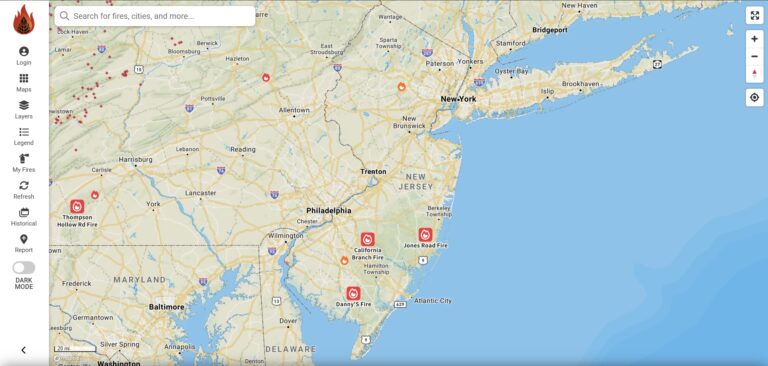

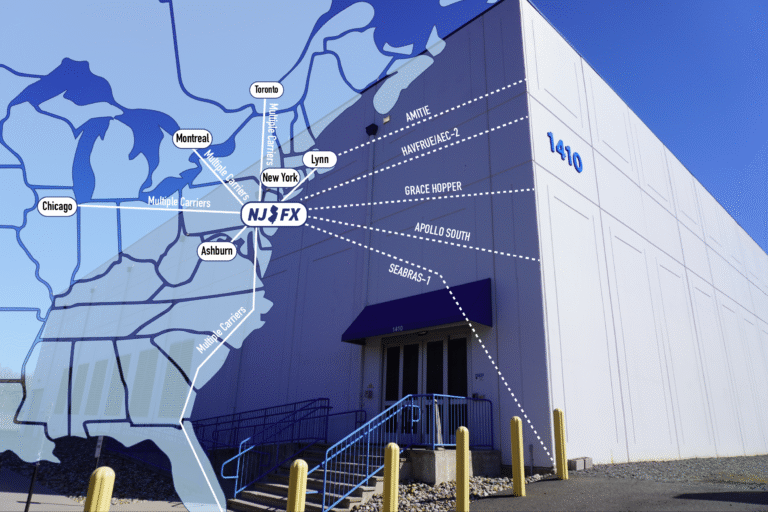

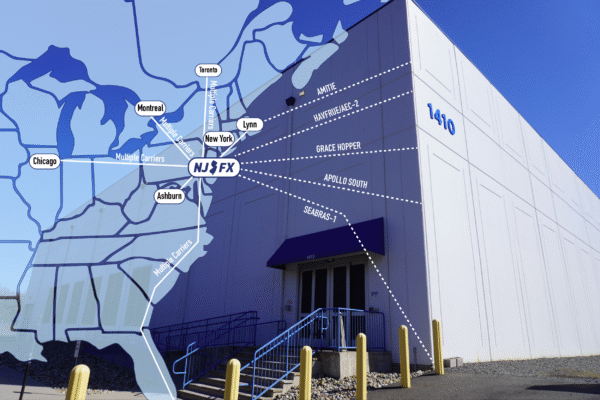

Felix Seda responded, “Historically, yes, subsea cables often landed in New York, but the subsea landscape has dramatically shifted.” He noted that cables now increasingly land in alternative locations such as New Jersey, Virginia Beach, and Myrtle Beach.

Seda highlighted the critical role of education in correcting these misunderstandings, stating, “We speak regularly with international ISPs who mistakenly believe cables land directly in New York City. One subsea cable lands at NJFX that connects directly to Brazil, bypassing Florida entirely. Educating networks about these physical assets and their true locations is essential.”

Data Surge at the Edge

Phillip Koblence, Co-Founder and COO of NYI, joined the conversation by affirming the centrality of New York in global connectivity despite infrastructure shifts. “The answer to whether New York can handle the data surge is unequivocally yes,” Koblence stated. “Data generated at the edge through technologies like AI, IoT, and inferencing continues to rely heavily on connectivity hubs.”

Arthur Valhuerdi, CTO of Hudson Interxchange, also expressed confidence in New York’s infrastructure readiness, emphasizing high-density capacity expansions: “We’re preparing for significant growth by developing cabinets capable of supporting up to 50 kW per rack, specifically to handle emerging inference workloads.” Valhuerdi further noted, “Connectivity requirements within data centers are increasing substantially, with racks now needing upwards of 1,028 fiber connections each. This number is expected to rise further, driving continuous enhancements to our infrastructure.”

Jeff Uphues, CEO of DC BLOX, expanded on the conversation by highlighting hyperscale growth across the Southeast. “We focus on hyperscale data centers interconnected by long-haul fiber networks,” Uphues stated, citing examples such as a cable landing station in Burlington, South Carolina, connected via 465 miles of fiber to Atlanta.

“Three subsea cables currently land in Myrtle Beach, with two more planned,” Uphues said. “One of these cables, a 500-terabit link between Santander, Spain, and Myrtle Beach, illustrates how subsea infrastructure drives regional connectivity.”

Uphues emphasized the escalating demand for infrastructure, noting, “One client requires 180-plus reels of fiber per megawatt. We’re building facilities with capacities exceeding 200 megawatts each, illustrating the massive scale and fiber density now demanded in our designs.”

He concluded by underscoring the interdependence of regional growth, adding, “The more infrastructure we build in the Southeast, the greater the need for mature connectivity to major hubs like New York City. This symbiotic growth underscores that it’s not an either-or situation but a collaborative expansion of network infrastructure.”

Phillip Koblence further elaborated on the industry’s maturity, emphasizing diverse needs within digital infrastructure. “The more the industry grows, the greater the overall need for connectivity,” Koblence explained. “It’s critical to understand that digital infrastructure isn’t one monolith; different applications and facilities require specific infrastructural solutions.”

Worn then posed a question about industry maturity to Koblence, asking, “How prepared are today’s networks to support tomorrow’s data-driven world?”

Infrastructure Maturity

Koblence responded, “It varies greatly across verticals. The New York market benefits from longstanding infrastructure laid decades ago, which continues to support modern internet traffic. Despite newer builds, much of the initial groundwork remains highly relevant due to advancements in fiber optic technologies.”

Jeff Uphues provided insight into infrastructure developments in the Southeast, noting, “Unlike New York, we don’t deal extensively with legacy copper infrastructure. Our facilities and networks are entirely new builds, allowing us to leapfrog many of the legacy challenges.”

He expanded, “I was part of MCI’s local service team that helped build out the early internet infrastructure—OC-2, OC-3, OC-12, OC-48 rings, and the first phase of lighting buildings with fiber. What we’re seeing now is the remaking of those early peering points. Hyperscale customers are heading to places with available land and power, where they can build large-scale campuses. These regional interconnection points are tied back into core data centers. It starts with the network,” he added. “Today, we’re talking about thousands and thousands of fiber slices in a facility. These are connected to the major hubs and are remapping the backbone of the internet.”

Felix Seda pointed to a notable shift in enterprise behavior: “Hyperscalers saw this coming. Enterprise customers were behind. They used to be fine letting a backup provider manage their capacity. Now they want control. They want to understand how their network connects directly off the subsea cable and how they can physically route that data to places like Virginia, Chicago, or New York City.”

Seda emphasized, “It’s about having physical overlay and true visibility into the routes their traffic takes. That shift in mindset is driving new infrastructure strategies for enterprise customers across the board.”

Building for the Next Wave

As the panel neared its conclusion, Worn asked a critical forward-looking question: “What’s next? How are you preparing for the next wave, especially in light of unexpected shifts like the Somerset, New Jersey explosion following the stock exchange’s move?”

Felix Seda responded with a focus on control and resilience. “It’s about mitigating risk,” he said. “At NJFX, we’re building infrastructure that increases bandwidth while eliminating middlemen. We’ve acquired the four physical beach conduits from SubCom—owning that last-mile infrastructure gives networks the control they need and avoids chokepoints in legacy hubs like 60 Hudson.”

Seda explained the impact of this ownership model: “By converging physical assets—wavelengths, dark fiber, and direct subsea access—we’re enabling more resilient, congestion-free connectivity paths. Some traffic still needs to go to New York, but increasingly, we’re seeing high-compute workloads move directly from places like Norway into NJFX.”

Phillip Koblence echoed the importance of end-to-end solutions: “We’re trying to get away from calling ourselves just a facility. We’re a facilitator—part of a broader solution. It’s not the customer’s job to figure out how to dig a trench to the beachhead. We’re solving for that so they can focus on what they do best.”

He added a humorous aside: “You can’t sell yourself as ‘New York’s best kept secret.’ That’s why Century 21 went out of business. You need to communicate value clearly—especially when you’re providing critical access like we do with our conduit system at 60 Hudson.”

Koblence also noted their exploration of quantum technologies: “The first wave of quantum commercialization will happen at the network layer—specifically in encryption. And it will rely on dark fiber. You can’t run those workloads on traditional lit networks. We’re laying the groundwork now, even if mass adoption is five years away.”

Ultimately, both leaders emphasized the same principle: supporting innovation by understanding customer pain points and delivering tailored infrastructure that scales with demand. “We’re seeing smarter customers. They know what needs to stay in New York and what can move south to take advantage of power, tax incentives, and real estate. That level of understanding is a big evolution.”

Koblence added, “You can’t separate metro hubs from expansion regions. The growth is mutual and interconnected—both have roles to play in supporting the next wave of data infrastructure demands.”

Final Thoughts: Capacity, Continuity, and Commitment

Arthur Valhuerdi wrapped the session with a focus on short-term readiness: “We’re focused right now on our high-density product—just seeing what kind of customers are coming and where demand is headed. We’ve got seven megawatts still to deploy and may dedicate an entire floor to high-density if that need materializes.”

Even if inference engines don’t fully materialize in the short term, Valhuerdi believes demand is inevitable. “Whether it’s 600kW cabinets or traditional workloads, all of this capacity will be absorbed. The internet isn’t a fad anymore—it’s foundational.”

He underscored that his goal remains simple: meet the needs of customers, whatever their content or application. “Especially at sites like 60 Hudson, 165 Halsey, and One Wilshire—it’s all about connectivity.”

Jeff Uphues shared a long-term view: “Eight years ago, we set out to build a dozen data centers across the Southeast within a decade. We’ve completed or have in development ten so far.”

He emphasized that their mission has remained consistent: “We’re focused on building network-centric infrastructure—interconnecting hyperscale edge nodes and data centers with a robust fiber backbone. We’ll continue to invest in subsea infrastructure and long-haul fiber.”

Reflecting on the company’s progress, Uphues said, “It hasn’t always played out in the exact way or timeline we envisioned, but our focus never wavered. It’s all about where we win, how we best serve our customers, and staying relentlessly committed to our strategy.”

With that, the panel concluded, with each expert reinforcing the evolving nature of digital infrastructure and the collaborative responsibility to shape its future.
