The AI-Ready Cable Landing Station is Coming

NJFX Expansion Will Bring Liquid-Cooled GPU Clusters to its Jersey Shore Subsea Hub

Written and published by Rich Miller | Data Center Richness

For many years, data center growth followed the network, with the largest clusters in major business markets. With the rise of AI, data center development has followed the power, seeking ample land and electricity to support massive campuses.

Gil Santaliz believes there are instances where AI will need to once again follow the network – all the way to the subsea cable landing.

Santaliz is the CEO of NJFX, a Tier III colocation facility and cable landing station in Wall Township, N.J. Last week NJFX announced an expansion that will enable it to house liquid-cooled GPU clusters.

NJFX says the new data hall, called Project Cool Water, will be the first purpose-built cable landing station campus in North America to support liquid-to-the-chip AI-ready infrastructure.

“The vision for NJFX has always been to support U.S. critical infrastructure with purpose-built assets that matter to our global economy,” said Santaliz, who believes having AI infrastructure at subsea cables can support strategic use cases in data sovereignty, security and network management.

“This new design ensures that subsea cables and global network carriers can continue to scale – now with an advanced data hall engineered for the AI era,” he added. “Our technical and security teams, working with federal, state, and local partners, remain committed to supporting the critical infrastructure of the United States.”

The new 10-megawatt high-density AI data hall at NJFX will feature 8 megawatts of IT load at an expected PUE of 1.25. The company announced the expansion after executing a load letter with utility JCP&L (supported by a $3 million deposit) targeting power delivery by the end of 2026.
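Those figures line up with the standard definition of power usage effectiveness (PUE): total facility power divided by the power delivered to IT equipment. A quick check, assuming the 10 megawatts refers to total facility draw at full IT load:

```latex
\mathrm{PUE} = \frac{P_{\text{facility}}}{P_{\text{IT}}}
\quad\Rightarrow\quad
P_{\text{facility}} = 1.25 \times 8\ \text{MW} = 10\ \text{MW},
\qquad
P_{\text{facility}} - P_{\text{IT}} = 2\ \text{MW}
```

On that reading, roughly 2 megawatts would go to cooling, power conversion and other non-IT loads.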

Why AI at the Cable Landing Station?

Much of the current growth in AI is focused on GPU capacity for training and refining large language models (LLMs) and related applications. But use cases are now shifting to inference, where AI applies what it has already learned to generate answers, analysis and content.

The growth of inference creates more opportunities for strategic deployments where geography matters, along with proximity to networks and data stores.

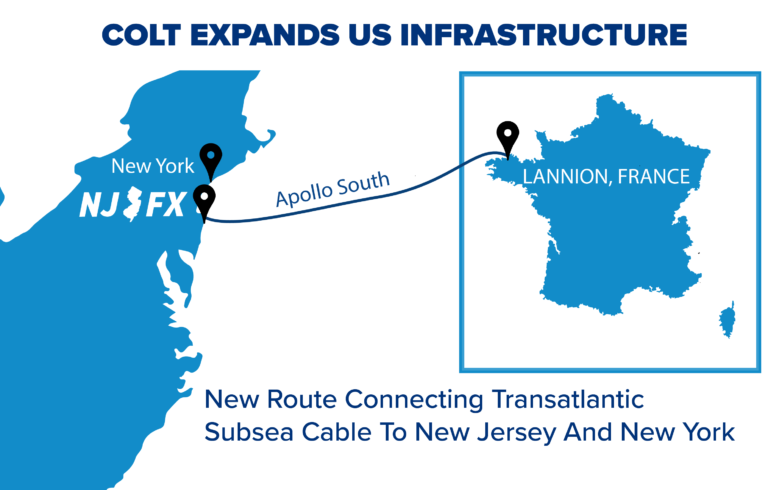

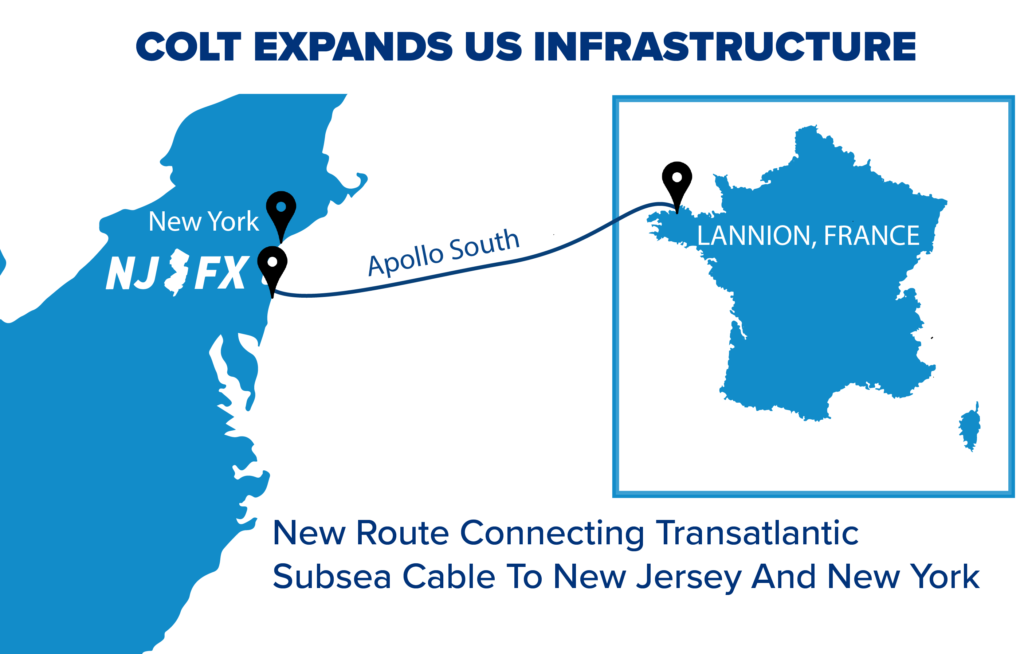

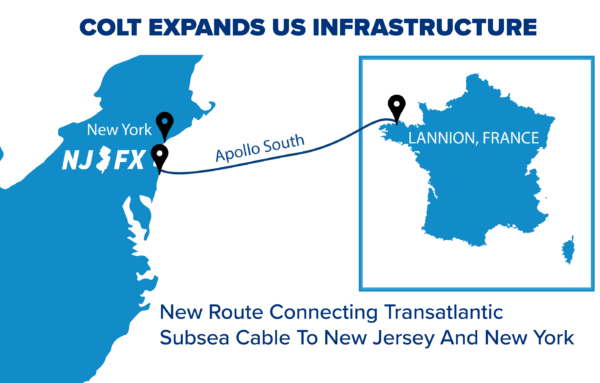

The NJFX campus hosts four subsea cables linking North America to Europe and South America and is located within 7 milliseconds of more than 100 million U.S. residents. With over 35 active network operators on-site, NJFX enables inference-ready interconnection for the next generation of AI and Generative AI workloads.

“Any network manager in the world will want to have access to intelligence to plan and optimize and manage your networks for security,” said Santaliz. “Governments are going to care about this, because you’ve got data sovereignty issues.

“A cable landing station is the first and last stop in a continent,” he added. “We’re North America’s home for four subsea cables connecting Europe and South America. Some companies don’t want specific data to leave the country.”

As global data flows grow larger and faster, companies and governments will need more computing power to analyze and manage them.

“The larger the network, the more complicated it gets,” said Santaliz. “AI in your network will become more common as time goes on.”

The Evolution of Subsea Infrastructure

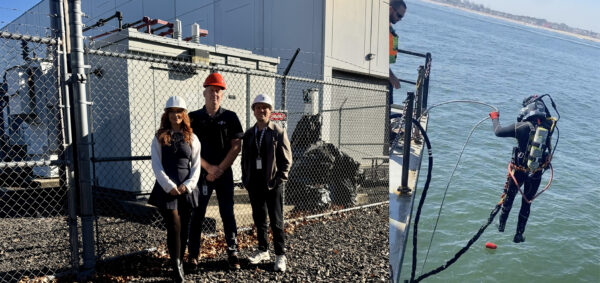

Until fairly recently, cable landing sites featured minimal infrastructure: perhaps a manhole near the beach where the cables come ashore, and sometimes a small facility operated by the phone company or cable owner. From there, fiber routes carried the data to carrier hotels in major cities like New York or Los Angeles.

The subsea cables themselves were typically funded by consortiums of telecom carriers, due to the major expense of building fiber optic cabling under the ocean.

Around 2015, hyperscale operators became the largest investors in subsea cables and began to redraw global fiber routes, seeking landings adjacent to strategic data center campuses.

Landing stations were changing as well, featuring more infrastructure. NJFX was perhaps the boldest move in this direction, building a 64,000-square-foot Tier III data center next to a cable landing station operated by Tata Communications. The facility is about a mile from the ocean.

“The cable landing station is no longer just a network access point – it’s becoming the infrastructure core for tomorrow’s digital economy,” said Santaliz. “Our investment in high-density infrastructure and power resiliency reflects our commitment to meeting customer demands today, while preparing for the explosive growth of AI-driven infrastructure ahead.”

The hyperscale shift created new cable landings in Virginia Beach and Myrtle Beach along the Atlantic Coast, which also feature commercial colocation space. It also expanded subsea cable connections beyond the large metro carrier hotels that handle incoming subsea traffic in cities like New York, Miami, Los Angeles and Seattle.

“I just want the industry to understand that it’s important that we have carrier hotels, but their evolution doesn’t match up with AI,” said Santaliz. “You’re not going to do liquid cooling in a carrier hotel, right? Most of those are office buildings – multi-story, multi-tenant buildings with windows. They don’t have the floor loads for liquid cooling. You need a purpose-built facility to do liquid-cooled GPUs.”

The NJFX Story

Santaliz was previously the CEO and founder of metro fiber provider 4Connections, which was acquired in 2008. Santaliz explored several opportunities in the data center business, and believed cable landings would be an emerging focus for companies seeking to move oceans of data and content.

The NJFX campus sits 64 feet above sea level in Wall Township on the Jersey Shore, between Asbury Park and Point Pleasant. As its business has grown, NJFX has built out manhole systems to connect to fiber routes along the Garden State Parkway and beyond. For Santaliz, AI represented the next business evolution.

“AI needs a lot of power, and I thought ‘we can do more power.’ (NJFX is) not a really big building, but the technology density means we don’t need that much space. We need a really hard surface that’s going to withstand 10,000 pounds per rack, with the ability to run water to them and support high density. We have 10,000 square feet of structured slab with drains where we could deploy high-density liquid cooling.

So we started down this journey about a year and a half ago, working with our local utility.”

AI and power is a sensitive issue in many communities, especially if power expansions require new infrastructure. On that front, the NJFX team benefitted from good planning.

“When Tyco originally picked the location, they gave an easement to JCP&L to put their substation on our property. We agreed to pay them to upgrade and add new transformers. So we’ve got a 25-megawatt transformer coming in, and we’re going to take 12 megawatts,” said Santaliz, with the balance going to JCP&L customers.

“Our partnership with JCP&L allows us to have better infrastructure in Monmouth County. It’s more capacity, and is going to provide our community better resilience for their homes in Monmouth County.”

The Road Ahead

As NJFX VP of Operations Ryan Imkemeier and his team make preparations for the new space, Santaliz says the concept is drawing interest.

“Our goal is to work with a customer to finalize their requirements,” said Santaliz, noting that many elements of new AI space will be customized to the customer’s requirements. “But we’re now in active conversation with several different prospects that have an interest.”
